What to know

By using the information in this chapter, you will be able to plan, conduct, and integrate activities to evaluate your program’s EHDI-IS. The findings from the evaluation can be used to ensure that your EHDI-IS serves as an effective tool in identifying and providing services to deaf and hard of hearing infants and children.

Chapter objectives

This chapter will help you to

- Understand the importance of an EHDI-IS evaluation;

- Identify the key steps for developing and implementing evaluation plans; and

- Understand the difference between monitoring and evaluation.

The practical use of program evaluation among EHDI programs

The goal of this chapter is to help strengthen understanding about how staff can use evaluation to monitor and assess their program's EHDI Information System (EHDI-IS) and integrate this into routine program practice. This chapter will illustrate how to plan evaluation activities, regardless of the type and stage of development of the system in place. It is meant to serve as a resource to assist those who are responsible for conducting the evaluation and any stakeholders who have an interest in the evaluation results. This chapter is based on the CDC's Framework for Program Evaluation and the Updated Guidelines for Evaluating Public Health Surveillance Systems.

Why evaluate your EHDI-IS?

Evaluation is essential to public health in order to improve programs and provide accountability to policy makers and other stakeholders. Comprehensive guidelines for Evaluating Public Health Surveillance Systems were published in CDC's Morbidity and Mortality Weekly Report (MMWR) (July 27, 2001). This MMWR was developed to promote the best use of public health resources by developing efficient and effective public health surveillance systems.

Integrating evaluation into the routine activities for the EHDI-IS can help ensure that

- The data collected and documented are of high quality and describe the true characteristics of the newborn's hearing screening, diagnostic, and early intervention status. This results in data that are accurate, complete, consistent, on time, unique, and valid.

- Each jurisdictional EHDI-IS has the acceptability, flexibility, simplicity, and stability needed to collect data, and the system can be operated by both users and reporters.

- Each jurisdiction has a useful EHDI-IS in place to support: (a) tracking of infants and young children throughout the EHDI process; and (b) connecting deaf and hard of hearing infants and young children with services they need.

- Resources to support and maintain EHDI-IS are being used effectively.

Evaluation

Program evaluation is "the systematic collection of information about the activities, characteristics, and outcomes of programs to make judgments about the program, improve program effectiveness, and/or inform decisions about future program development."

Evaluation can take many different directions, but it is always responsive to the program's needs as well as those of its stakeholders and resources.

Purpose of the evaluation

Through evaluation, team members can identify what is working well or poorly, determine whether the objectives are being achieved, and provide evidence for recommendations about what can be changed to help the system better meet its intended goals. Using the evaluation results and sharing lessons learned will help EHDI programs build on their success.

It is a common misconception that evaluation is something that you plan and conduct at the end of a project. Evaluations are designed to do one of two things or a combination of both: either to improve aspects of your system or to prove or show that the system is reaching its intended outcomes. Establishing a clear purpose of the evaluation from the beginning will reduce misunderstandings and aid consideration of how the evaluation findings will be used.

Often an evaluation is conducted because funding agencies require it, or because program staff and jurisdictional stakeholders have an interest in it. In both cases, the statement of the evaluation's purpose should answer the following questions:

- What does this evaluation strive to achieve?

- What is the purpose of this evaluation?

- How will findings from the evaluation be used?

Planning for your evaluation, where to start?

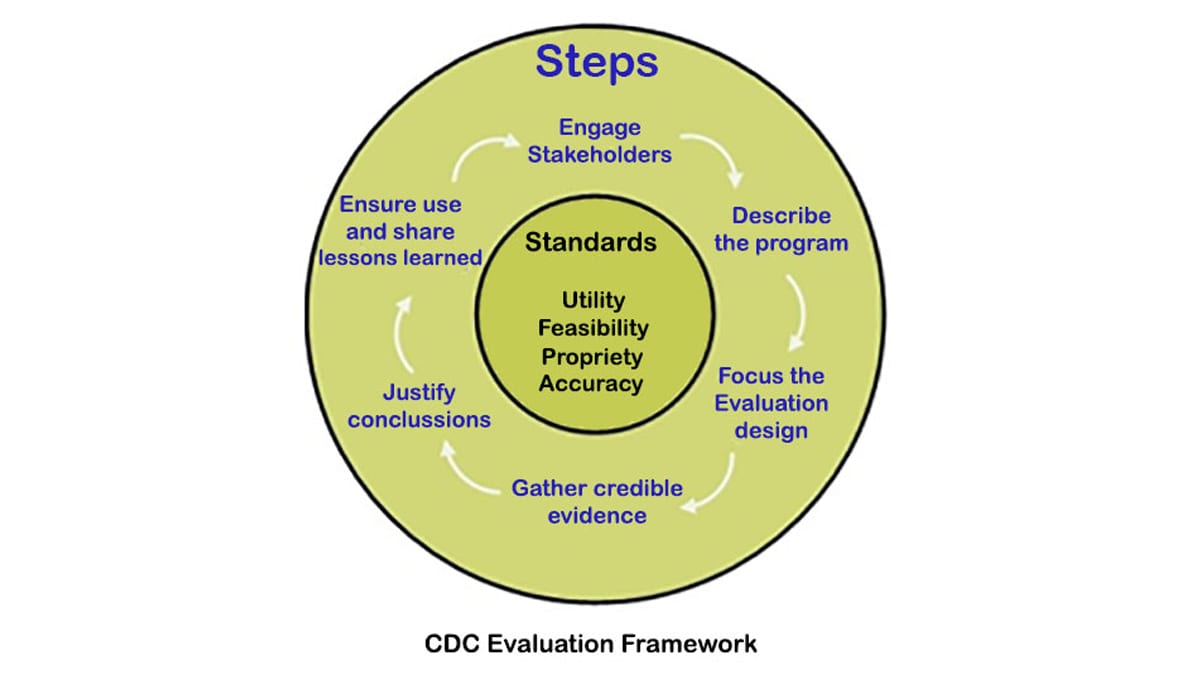

A practical guide to the evaluation process that uses real-world examples from the EHDI-IS is available in the document titled Planning the Evaluation of your EHDI-IS [PDF – 518KB]. Regardless of the purpose of the evaluation, there are critical steps to follow to be appropriately responsive. In this chapter, evaluation of an EHDI-IS is divided into six steps:

- Engage stakeholders

- Describe the program

- Focus the evaluation design

- Gather credible evidence

- Justify conclusions

- Ensure use and share lessons learned

1. Engage your stakeholders

The initial steps in evaluation are the formation of an evaluation team and engagement of stakeholders. Evaluation stakeholders are those who have an interest in the evaluation and may use its results in some way (those involved, those affected, and primary intended users of evaluation). Conducting an evaluation of an EHDI-IS requires both knowledge of evaluation and an in-depth understanding of EHDI programs. Jurisdictional EHDI program staff and evaluators will be working together to produce evaluations that best fit their program and answer the evaluation questions with credible evidence.

The preferred approach is to have the evaluation team include internal EHDI staff, external stakeholders, and possibly consultants or contractors with evaluation expertise. An evaluation leader should engage stakeholders, coordinate the team, and maintain continuity throughout the process.

Why stakeholders?

Stakeholders can be extremely valuable throughout the evaluation process and it is important to engage them early and often, especially in the planning stages of the evaluation. Misunderstanding about evaluation and barriers to the productive use of evaluation findings can be avoided or reduced when program stakeholders are included in key discussions at various points throughout the evaluation's lifecycle.

The involvement of stakeholders is also important to an evaluation because this

- Ensures transparency;

- Increases the quality, scope, and depth of questions;

- Facilitates the evaluation process;

- Acknowledges the political context of the evaluation;

- Builds evaluation capacity; and

- Fosters relationships and collaboration.

The EHDI system involves multiple stakeholders, including hospitals, audiologists, medical homes, early intervention programs, and state health departments. Some of the stakeholders may be relevant to the evaluation of the EHDI-IS in your jurisdiction and some may not. Only you and your program staff can decide which stakeholders should be involved, depending on the type and stage of the evaluation.

How to engage stakeholders in evaluation?

- Invite stakeholders to discuss evaluation questions and the evaluation's purpose.

- Discuss with stakeholders what evidence would be considered credible and any potential limitations of the evaluation.

- Engage stakeholders (e.g., through meetings, teleconferences, phone calls, and e-mails).

- Keep stakeholders informed about the progress of the evaluation.

- Include stakeholders in developing recommendations for your program based on the evaluation findings.

Team members and stakeholders too busy for evaluation?

- Good evaluation requires a combination of skills rarely found in a single person. The good news is everyone will not need to commit the same amount of time. You can invite stakeholders into key discussions at various points throughout the lifecycle of an evaluation. Often a small evaluation team involved and engaged in the evaluation process can be an effective way to keep the process running.

When key stakeholders have been identified, the next step is to provide them with a description of the EHDI Information System being evaluated that is inclusive of all system components.

2. Describe your EHDI-IS

The second step in CDC's evaluation framework is to describe your EHDI-IS. This very important step ensures that program staff, the evaluator, and other stakeholders share a clear understanding of what the EHDI-IS entails and how the system is supposed to work.

You have the opportunity to describe

- The need for the EHDI-IS;

- The stage of development;

- The resources used to operate it;

- The strategies and activities you believe lead to its development and maintenance; and

- The social and political context in which the system is implemented.

Once all the components of the system have been identified, a graphic representation may help to summarize the relationships among those components.

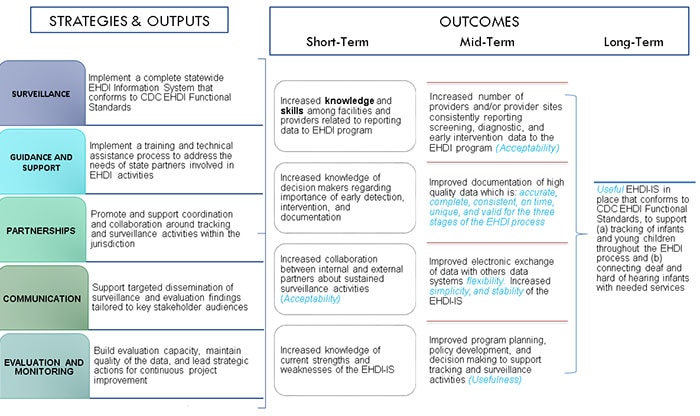

Describing your information system using logic models

A logic model is a simplified graphic representation of your system. Logic models are common tools that EHDI staff can use for planning, implementation, evaluation, and communication purposes. Developing a logic model can help gain clarity on the relationship between activities and their intended outcomes. A logic model includes four components: inputs, strategies or activities, outputs, and outcomes.

Inputs

Inputs are the resources that go into the system and help to determine the number and type of activities that can be reasonably implemented during the project. These are the people (usually from inside and outside the program), budget, infrastructure, and information needed. During the planning stage, the list of resources is essential to deciding the type of activities and strategies the program will be able to implement.

Activities

Activities are the actual events or actions that take place as a part of the EHDI-IS, such as:

- Developing, maintaining, and upgrading the data system;

- Providing technical assistance to data reporting sources;

- Collecting data from data sources;

- Promoting and supporting coordination around tracking and surveillance activities;

- Supporting targeted dissemination of surveillance data; and

- Developing and conducting evaluation activities.

Outputs

Outputs are the direct products of the program components' activities, such as:

- Data reports;

- Protocols in place;

- Number of trainings addressed to data reporting sources;

- Number of meetings with partners; and

- Data sharing agreements in place.

Outcomes

Outcomes are the intended effects of the program's activities. They are the changes you want to occur or things you want to maintain in your surveillance system. These changes can be expressed as short–, intermediate–, and long–term outcomes. All outcomes must indicate the direction of the desired change (i.e., increase, decrease, or maintain).

Short–term outcomes

Short–term outcomes are the immediate effects of your program component, such as:

- Increased knowledge among decision makers after dissemination of key information;

- Increased skills among data reporters after the implementation of training; and

- Reduced numbers of errors after examining data queries.

Mid–term outcomes

These are the intended effects of your program components that take longer to occur. Examples include,

- Improved timeliness in the collection of screening data after the implementation of a new Web data reporting system;

- Improved electronic exchange of data with Vital Records after data sharing agreements are in place;

- Increased collaborations and reporting by providers after meetings and trainings; and

- Improved program planning after evaluation results are disseminated.

Long–term outcomes

Long-term outcomes are the intended effects of your program component that may take several years to achieve. Examples include,

- Improved surveillance of infants and young children throughout the EHDI process;

- Improved documentation of early intervention services outcomes;

- Development and implementation of revised or new policies; and

- Implementation of a useful EHDI-IS that conforms to CDC EHDI Functional Standards and serves as a tool to help programs ensure all deaf and hard of hearing infants are identified early and can receive intervention services.

After you have decided on the various components of your logic model, arrange them in a way that reflects how your program operates. Examine the model carefully. Does each step logically relate to the other? Are there missing steps that disrupt the logic of the model? It is important to remember that logic models are living documents, which can change over time with improvements to the system, changes in resources, or modifications made to the program.

Enhancing EHDI-IS performance with logic models

INPUTS: Infrastructure, funding, staff, guidance and support, stakeholders, information system

What attributes should be considered when evaluating the EHDI-IS?

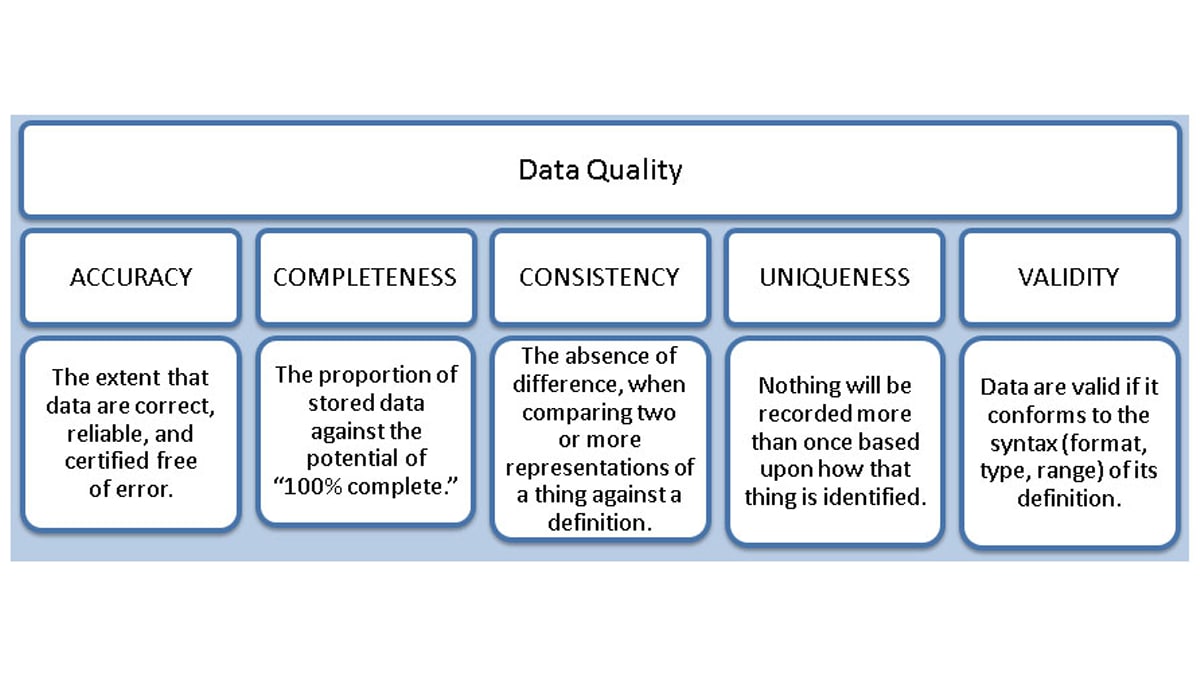

The evaluation of public health surveillance systems involves an assessment of system attributes. As noted in blue in the above logic model, the more important attributes for the EHDI-IS are: data quality, timeliness, acceptability, simplicity, flexibility, sensitivity, stability, and usefulness.

The graphic below provides a list of data quality attributes (dimensions) that state EHDI programs can choose to adopt when looking to assess the quality of the data in the EHDI-IS. It is not a rigid list. The use of the dimensions will vary depending on the requirements of individual EHDI jurisdictions.

A complete description of the dimensions of EHDI Data Quality Assessment can be found in the document titled The Six Dimensions of EHDI Data Quality Assessment [PDF – 195 KB].

When evaluating data quality, consider including the following evaluation questions:

- Is the EHDI-IS able to document complete, accurate, unique, consistent, and valid screening and diagnostic data on all occurrent births in the state?

- Which factors are affecting the quality of the data?

- Is the system able to identify and monitor system errors?

- What changes in the system need to be implemented?
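Two of the data quality dimensions named above, completeness and uniqueness, lend themselves to simple automated checks. The following is a minimal sketch in Python, not a prescribed method: the records, the field names (`infant_id`, `birth_date`, `screen_result`), and the helper functions are all hypothetical and would need to be adapted to the fields your own EHDI-IS actually stores.

```python
from collections import Counter

# Hypothetical screening records; the field names are illustrative only.
records = [
    {"infant_id": "A1", "birth_date": "2024-01-03", "screen_result": "pass"},
    {"infant_id": "A2", "birth_date": "2024-01-05", "screen_result": None},
    {"infant_id": "A2", "birth_date": "2024-01-05", "screen_result": "refer"},
    {"infant_id": "A3", "birth_date": None, "screen_result": "pass"},
]

REQUIRED_FIELDS = ("infant_id", "birth_date", "screen_result")


def completeness(rows, fields):
    """Share of required field values that are present (not None or empty)."""
    total = len(rows) * len(fields)
    filled = sum(1 for row in rows for f in fields if row.get(f) not in (None, ""))
    return filled / total if total else 0.0


def duplicate_ids(rows, key="infant_id"):
    """Identifiers that appear on more than one record (a uniqueness check)."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


print(f"Completeness: {completeness(records, REQUIRED_FIELDS):.0%}")
print("Duplicate IDs:", duplicate_ids(records))
```

Checks like these only describe the data. Deciding which factors are affecting quality, and what changes are needed, still requires the evaluation team's judgment.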

This graphic provides a list of attributes that jurisdiction EHDI programs can choose to adopt when assessing the EHDI-IS and potential evaluation questions per attribute.

ATTRIBUTES FOR THE EHDI-IS

| Attribute | Definition | Potential evaluation questions |
| --- | --- | --- |
| Acceptability | The willingness of persons and organizations to participate in the EHDI information system. | To what extent do audiologists in the state know about the jurisdictional EHDI program and use the EHDI data reporting module? What barriers prevent audiologists from reporting diagnostic data? |
| Flexibility | EHDI-IS can adapt to changing information needs or operating conditions with little additional time, personnel, or allocated funds. | How flexible is the EHDI-IS to electronically interchange data with other data systems with little additional time, personnel, or allocated funds? |
| Simplicity | Refers to both its structure and ease of operation. EHDI-IS should be as simple as possible while still meeting its objectives. | How easy is it to use the EHDI-IS for managing data and generating reports? |
| Stability | Reliability (i.e., the ability to collect, manage, and provide data properly without failure) and availability (to be operational when it is needed). | How consistently does the EHDI-IS operate? What is the frequency of outages? |
| Usefulness | EHDI-IS contributes to early identification of hearing loss and connects deaf and hard of hearing babies with desired services; provides understanding of hearing loss implications; contributes performance measures for accountability. | How does the EHDI-IS contribute to early identification of hearing loss and connect deaf and hard of hearing babies and very young children with needed services? |

The following evaluation questions can be considered when assessing each attribute.

Acceptability

- To what extent are hospital screening staff complying with the established protocols?

- What additional trainings are needed?

- Did the training meet the attendees' needs?

- To what extent do audiologists in the state know about the EHDI program and use the EHDI-IS?

- How could the EHDI program better educate audiologists about using the EHDI-IS?

- What barriers prevent audiologists from reporting diagnostic data?

- How could the EHDI program better increase awareness among audiologists about the importance of documenting and communicating results?

- What additional stakeholders should the EHDI program engage to improve documentation of diagnostic and early intervention data?

Flexibility

- How flexible is the EHDI-IS to electronically interchange data with other data systems?

- Does the EHDI-IS have the ability to shift from hearing loss tracking to surveillance (explained below)?

- Can your system meet changing detection needs?

- Can it add unique data?

- Can it capture other relevant data?

- Can it add providers/users to increase capacity?

Simplicity

- How easy is it to use the EHDI-IS for managing data and generating reports?

- Do you have the ability to generate new reports with minimal effort (e.g., without having to submit a request to a vendor or your IT department)?

- What downtime is needed for servicing/updating?

Stability

- Is the EHDI-IS consistently operating?

- What is the frequency of outages?

Usefulness

- Did the collected EHDI data lead to strategic actions that benefitted the program or stakeholders?

- Did the EHDI-IS contribute to the early identification of deaf and hard of hearing infants and connecting them with intervention services?

- Does the EHDI-IS support policy development, program planning, and delivery of services?

3. Focus your evaluation design

After completing Steps 1 and 2, you and your stakeholders should have a clear understanding of your EHDI-IS. The evaluation team then decides the evaluation design, which will be determined by several elements: the type of evaluation (i.e., process evaluation or outcome evaluation), the questions it wants to answer, and the evaluation's purpose.

Process evaluation or outcome evaluation

There are two basic types of evaluation: process evaluation and outcome evaluation. Process evaluation focuses on examining the implementation of the system, determining whether activities are being implemented as planned, and whether the inputs and resources are being used effectively. Outcome evaluation focuses on showing whether or not a program component is achieving the desired changes.

Examples of process evaluation questions include

- To what extent are all data reporters willing to participate and report data in a timely manner to EHDI-IS?

- In what ways may the existing information technology (IT) infrastructure be improved for better data collection and management?

- To what extent are hospitals' screening staff complying with the established protocols?

- What additional trainings are needed?

- Did the training meet the attendees' needs?

- What are the most important causes of loss to follow–up in the state?

Examples of outcome evaluation questions include

- Is the number of active users increasing over time as more facilities are trained?

- Did the disseminated surveillance data lead to strategic actions that benefitted the program stakeholders?

- Did the disseminated surveillance data lead to strategic actions that helped data users connect deaf and hard of hearing babies with the services they need?

When we examine our programs, the perception that each component and process is necessary for the larger whole may make it difficult to decide what to evaluate. However, resources are rarely available to address all aspects of a program. Therefore, it is necessary to establish priorities to make final decisions about what specific evaluation questions the evaluation team will answer and how.

There are many methods, strategies, and tools that can help with prioritizing evaluation questions. Regardless of the method selected, utility and feasibility are two important evaluation standards to keep in mind. An example of prioritization can be found in the document: Planning an Evaluation of an EHDI Information System, page 2 [PDF – 519 KB].

Before beginning the evaluation, the evaluation team should ask the following questions and be comfortable with the answers.

- Who will use the evaluation findings?

- How will the findings be used?

- How feasible is this evaluation?

- Can it be done with available resources and within the available timeframe?

Evaluation design

Now that you have selected the evaluation questions to answer and you have a clear evaluation purpose, it is time to think about the evaluation design. The evaluation design depends on the purpose of the evaluation. A design (indicators, data collection methods, data collection sources) is used to structure the evaluation and show how all of the major parts of the evaluation project work together to address the evaluation questions.

There are three overarching types of evaluation designs: experimental, quasi-experimental, and nonexperimental. For many surveillance evaluations, you will find that a simple, nonexperimental design is an appropriate evaluation design. However, other evaluation designs may be used depending on the question you intend to answer. In this chapter, we emphasize nonexperimental designs and some quasi-experimental designs.

Nonexperimental designs, also known as observational or descriptive designs

Nonexperimental designs include case study and post-test only designs. In these designs, there is no randomization of participants to conditions, no comparison group, and no repeated measurement of the same factors over time. For example,

- Evaluation teams may use surveys to assess the level of stakeholders' satisfaction with the data and data dissemination.

- Evaluation teams may interview audiologists to assess whether the electronic reporting process or paper reporting form is user friendly.

Quasi-experimental design

Quasi-experimental designs are characterized by the use of one or both of the following: 1) the collection of the same data at multiple points in time, or 2) the use of a comparison group. Many designs fall under this heading, including but not limited to:

- Pre-post tests without a comparison group;

- A nonequivalent comparison group design with a pre-post test or post-test only;

- Interrupted time series; and

- Regression discontinuity.

For example,

- Evaluation teams might use data reports to determine if the number of audiologists reporting EHDI data is increasing over time after the implementation of training (interrupted time series before and after training).

- Evaluation teams may use pre– and post–tests to assess how effective the training was in increasing knowledge of the data reporting process.
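A pre-post comparison without a comparison group can be summarized with very little computation. The sketch below, in Python, uses invented knowledge-test scores for five trainees. The scores, the 0 to 100 scale, and the variable names are assumptions made for illustration and are not drawn from any EHDI program.

```python
from statistics import mean

# Hypothetical knowledge-test scores (0-100) for the same five trainees,
# listed in the same order before and after the training.
pre_scores = [55, 60, 70, 45, 65]
post_scores = [75, 80, 72, 70, 85]

# Change for each trainee (post minus pre), paired by position.
changes = [post - pre for pre, post in zip(pre_scores, post_scores)]

mean_change = mean(changes)
improved = sum(1 for change in changes if change > 0)

print(f"Mean change in score: {mean_change:.1f} points")
print(f"Trainees who improved: {improved} of {len(changes)}")
```

Because this design has no comparison group, a positive mean change is consistent with the training being effective but does not by itself rule out other explanations.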

Indicators and performance measures

Indicators and performance measures are specific, observable, and measurable characteristics or changes that show the program's progress toward achieving an objective or specified outcome. Indicators are tied to the objectives identified in the program's description, the logic model, and/or the evaluation questions. The indicator must be clear and specific about what it will measure.

The evaluation staff must decide which data collection, management, and analysis strategies are most appropriate for each indicator and whether needed technical assistance for implementation is available and affordable.

When conducting outcome evaluations, there are some common standard indicators for EHDI programs (e.g., loss to follow-up/loss to documentation [LFU/LTD] for diagnosis). Consider including some of them to show progress toward achieving specific outcomes.
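To make the idea of a specific, measurable indicator concrete, the sketch below computes a simplified LFU/LTD percentage for diagnosis in Python. The formula is an assumption made for illustration: it treats every infant who did not pass screening and has no documented diagnosis as LFU/LTD, and it is not the official calculation. The function name and the counts are hypothetical. Your program's indicator definitions should take precedence.

```python
def lfu_ltd_rate(not_passed, with_documented_diagnosis):
    """Simplified LFU/LTD rate for diagnosis (illustrative assumption only).

    not_passed: infants who did not pass the hearing screening.
    with_documented_diagnosis: those infants with a documented diagnostic result.
    """
    if not_passed <= 0:
        raise ValueError("not_passed must be greater than zero")
    if not 0 <= with_documented_diagnosis <= not_passed:
        raise ValueError("with_documented_diagnosis must be between 0 and not_passed")
    return (not_passed - with_documented_diagnosis) / not_passed


# Hypothetical counts for one reporting year.
rate = lfu_ltd_rate(not_passed=200, with_documented_diagnosis=150)
print(f"LFU/LTD for diagnosis: {rate:.1%}")
```

Writing the indicator as a function forces the team to state the numerator and denominator explicitly, which is one way to keep the indicator clear and specific about what it measures.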

4. Gather credible evidence

The information you gather in your evaluation must be reliable and credible for those who will use the evaluation findings. In this step, you will work with your stakeholders to identify the data collection methods and sources you will use to answer your evaluation questions.

Work with your stakeholders to specify what constitutes credible evidence in terms of:

- Quantity: What amount of information is sufficient?

- Quality: Is the information trustworthy (i.e., reliable, valid, and informative for the intended uses)?

- Context: What information is considered valid and reliable by the stakeholders?

For a small group of participants or a short survey, the data can be manually analyzed or you can use various free online tools. When you are collecting a large amount of qualitative data, you will likely need to use a tool or specialized software to support the analysis and management of this information.

Different techniques are used to analyze qualitative data, but they all require skills and knowledge. Essentially, the data collected will be classified by categories and themes, and codes should be created to facilitate the analysis.

If you plan to collect a large amount of qualitative data, it is helpful to have the support of experts with backgrounds in evaluation and behavioral and social sciences or who have knowledge in these areas.

5. Justify conclusions

After analyzing your data, the next step is to examine your results and determine what the evaluation findings "say" about your EHDI-IS. This involves linking all the findings to the evaluation questions and telling your program's story. Keep your audience in mind when preparing the report. What do they need and want to know? Also, keep in mind that the findings are the basis for developing recommendations for program improvement.

Recommended elements for the evaluation report include

- Comparison of actual with intended outcomes;

- Comparison of program outcomes with those of previous years. Use existing standards as a starting point for comparisons; and

- Limitations of the evaluation, such as

  - Possible biases;

  - Validity of results;

  - Reliability of results; and

  - Generalizability of results.

Although a final evaluation report is important, it is not the only way to distribute findings. Depending on your audience and budget, you can consider different ways of delivering evaluation findings. Additional information on reporting evaluation findings can be found in the document titled Evaluation Reporting: A Guide to Help Ensure Use of Evaluation Findings. [PDF – 550KB]

6. Ensure use and share lessons learned

Always ask the question "so what?" at the end of the evaluation. This is important because the purpose of program evaluation is to improve programs. Therefore, the evaluation results can help modify aspects of your EHDI-IS, strengthen current activities, and/or change elements that may not be working.

An important part of this process is when people in the real world, like you, apply the evaluation findings and experiences to their work. This is also the time to share the lessons learned with all of your stakeholders who have a vested interest in achieving the EHDI goals.

Monitoring versus evaluation

This final section describes the difference between the monitoring process and program evaluation. While these are complementary and interrelated components of program assessment, they differ in structure and outcomes.

Monitoring

Program monitoring is part of the evaluation process and provides a core data source on which to build a program evaluation. While monitoring tracks implementation progress, evaluation examines the factors that contribute to success or failure in order to understand why a program may or may not be working. Evaluation activities build on the data routinely collected in ongoing monitoring activities.

We can define the monitoring process as the observation and recording of multiple activities within the system to help staff identify problems with program operation. EHDI program staff can implement monitoring processes using their EHDI-IS as an "early warning system" to identify and address data issues at several steps (hearing screening, diagnostic assessment, and enrollment in early intervention) in meeting the EHDI 1-3-6 benchmarks.
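One way to picture an "early warning" check against the 1-3-6 benchmarks is shown in the Python sketch below. It is an illustration under stated assumptions: the benchmarks (screening by 1 month of age, diagnosis by 3 months, early intervention by 6 months) are approximated as 30, 90, and 180 days, and the function name, step names, and dates are hypothetical.

```python
from datetime import date

# Assumption: 1, 3, and 6 months of age approximated as 30, 90, and 180 days.
BENCHMARK_DAYS = {"screening": 30, "diagnosis": 90, "intervention": 180}


def benchmark_status(birth, events):
    """Classify each 1-3-6 step as 'met', 'late', or 'not documented'."""
    status = {}
    for step, limit in BENCHMARK_DAYS.items():
        event_date = events.get(step)
        if event_date is None:
            status[step] = "not documented"
        elif (event_date - birth).days <= limit:
            status[step] = "met"
        else:
            status[step] = "late"
    return status


# Hypothetical infant record: screened on time, diagnosed late, no enrollment on file.
example = benchmark_status(
    birth=date(2024, 1, 1),
    events={"screening": date(2024, 1, 3), "diagnosis": date(2024, 4, 15)},
)
print(example)
```

A routine report built on a check like this could list records marked "late" or "not documented" so that staff can follow up before the next benchmark passes.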

The number and frequency of the monitoring process activities are decided by the jurisdiction and depend on the area of the assessment. For example, monitoring processes done to assess the completeness of the data submitted by hospitals may be performed on a daily or weekly basis, whereas other processes to identify duplicate entries in records, data errors, or missing data may be performed on a monthly basis. Each EHDI-IS has the ability to be queried and provide ad hoc reports, which are useful when developing monitoring processes, and vice versa.

Program managers may want to consider assigning the person in charge of the evaluation (e.g., data managers, epidemiologists, or someone hired externally) to run those reports and be prepared to effectively manage any unexpected situation with the collection and documentation of EHDI data.

For example, a state EHDI program implements a monitoring process that examines ad hoc hospital reports over the past 6 months and notices that a particular hospital's performance appears to be consistently worse than the rest. From January to June, hospital xyz's miss rate consistently ranges from 8% to 10%, when the average should be 3% to 5%. This monitoring process provides a core data source on which to build a program evaluation. Later, staff can frame observations into evaluation questions, such as: How is hospital xyz's process different from that of other hospitals? Is the high miss rate attributable largely to the hospital's intensive care unit or to the well-baby unit?
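The hospital example can be sketched as a small monitoring script in Python. Everything here is hypothetical: the monthly counts are invented, the miss rate is assumed to mean infants not screened divided by infants born, and the 5% threshold is simply the upper end of the 3% to 5% range mentioned in the example.

```python
# Hypothetical monthly counts, January to June: (infants born, infants not screened).
monthly_counts = {
    "hospital_xyz": [(100, 9), (120, 10), (110, 11), (100, 8), (90, 9), (100, 10)],
    "hospital_abc": [(200, 8), (210, 7), (190, 9), (205, 6), (195, 8), (200, 9)],
}

EXPECTED_MAX_MISS_RATE = 0.05  # upper end of the 3% to 5% range in the example


def miss_rates(counts):
    """Monthly miss rate: infants not screened divided by infants born."""
    return [missed / births for births, missed in counts]


def consistently_high(counts, threshold=EXPECTED_MAX_MISS_RATE):
    """True when every month's miss rate is above the threshold."""
    return all(rate > threshold for rate in miss_rates(counts))


flagged = sorted(
    name for name, counts in monthly_counts.items() if consistently_high(counts)
)
print("Hospitals to review:", flagged)
```

A flag produced this way is a monitoring result, not an evaluation finding. It identifies where to look, and the evaluation questions then explore why the miss rate is high.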